In this article, we explore the technology behind AI avatars, their potential business applications, and how they perform in real-time environments. A key challenge in virtual communication today is achieving a sense of copresence, where participants feel as if they are physically in the same space. Current avatar technologies fall short, often using stylized, cartoon-like figures that fail to convey a user’s true likeness, emotions, or personality. This limitation hinders the feeling of genuine connection during virtual interactions.

AI Avatars

Experiment

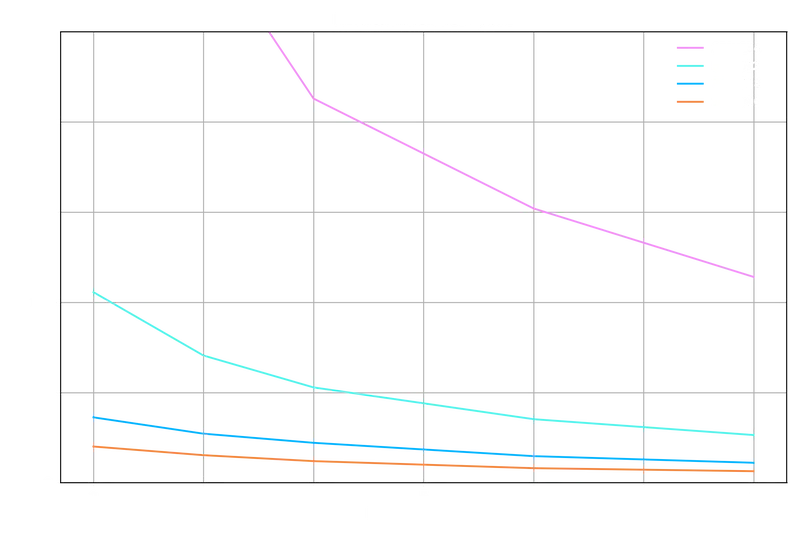

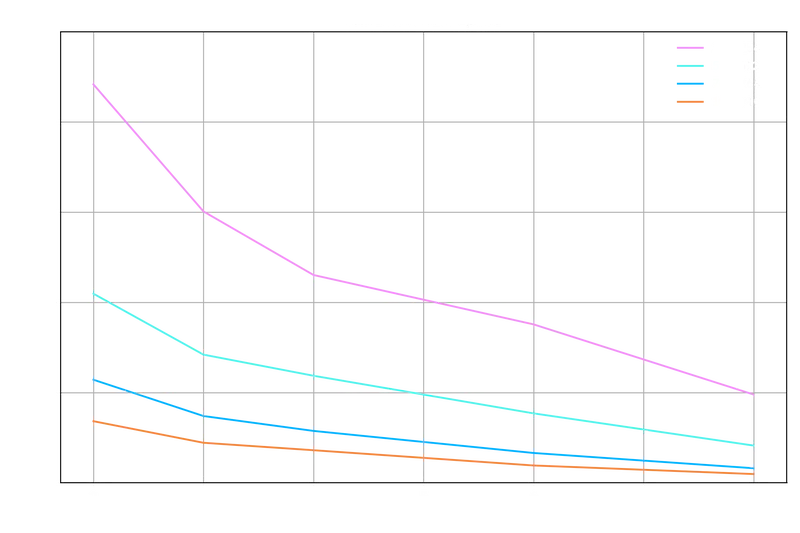

The experiments measured how avatar resolution and the number of avatars affect rendering performance. We tested 2 to 8 AI avatars at square resolutions of 256, 512, 768, and 1024 pixels per side. Video conferencing guidelines suggest framing the face and upper chest, which translates into a 50–70% screen occupation (Georgetown University, 2021; Townsend, 2020). We used 50% screen occupation for our calculations.

For common video resolutions, this results in the following on-screen sizes (width x height, in pixels) for each participant:

| Participants | 720p (1280x720) | 1080p (1920x1080) | 4K (3840x2160) |
|---|---|---|---|
| 2 | 320 x 360 | 480 x 540 | 960 x 1080 |
| 3 | 214 x 360 | 320 x 540 | 640 x 1080 |
| 4 | 320 x 180 | 480 x 270 | 960 x 540 |
| 5 | 256 x 180 | 384 x 270 | 768 x 540 |
| 6 | 214 x 180 | 320 x 270 | 640 x 540 |
| 7 | 183 x 180 | 274 x 270 | 549 x 540 |
| 8 | 160 x 180 | 240 x 270 | 480 x 540 |
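
The sizes in the table can be approximated with a short calculation. The sketch below is an illustration, not the procedure used to produce the table. The function name `avatar_size` and the layout rule are assumptions inferred from the numbers: one row for up to 3 participants, two rows for 4 or more, and the 50% occupation applied to both the width and the height of each tile. The rounding rule is not stated in the article, so entries that do not divide evenly may differ from the table by a pixel.

```python
# Common video resolutions from the table, as (width, height) in pixels.
VIDEO_MODES = {"720p": (1280, 720), "1080p": (1920, 1080), "4K": (3840, 2160)}


def avatar_size(participants, frame_width, frame_height, occupation=0.5):
    """Return the approximate (width, height) in pixels of one avatar.

    Assumed layout (inferred from the table, not stated in the article):
    one row for up to 3 participants, two rows for 4 or more, with the
    frame width shared evenly by the participants in a row.
    """
    if participants < 1:
        raise ValueError("participants must be at least 1")
    rows = 1 if participants <= 3 else 2
    columns = participants / rows  # may be fractional, e.g. 2.5 for 5 people
    tile_width = frame_width / columns
    tile_height = frame_height / rows
    # The occupation factor scales each dimension of the tile.
    return (round(tile_width * occupation), round(tile_height * occupation))


if __name__ == "__main__":
    for name, (width, height) in VIDEO_MODES.items():
        for n in range(2, 9):
            w, h = avatar_size(n, width, height)
            print(f"{name}: {n} participants -> {w} x {h}")
```

For example, `avatar_size(8, 1280, 720)` returns `(160, 180)`, matching the 720p entry for 8 participants.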

We ran these measurements on an Apple M1 Mac. Future iterations of the technology should benefit from faster Apple Silicon chips, such as those used in the Vision Pro, which we expect to become a key platform in the near future.

Results

Typical video conferencing tools run at 30 frames per second (fps) or lower (Umbdenstock, 2021). Our results show that up to 8 AI avatars can be rendered at acceptable resolutions while maintaining a smooth video conferencing experience. For example, rendering 8 AI avatars at 256x256 yields frame rates as high as 50 fps, comfortably above that 30 fps baseline and sufficient for 720p and 1080p video. With 2–4 participants, 512x512 offered the best balance between quality and performance. For one-on-one interactions, even higher resolutions are feasible.
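
To make the comparison between 50 fps and the 30 fps baseline concrete, the frame rates can be converted into per-frame time budgets. This is a minimal sketch of that arithmetic only. The helper names `frame_time_ms` and `meets_target` are hypothetical, and nothing here models how the avatars are actually rendered or scheduled.

```python
def frame_time_ms(fps):
    """Time available to produce one frame, in milliseconds."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


def meets_target(measured_fps, target_fps=30.0):
    """True when the measured frame rate is at least the target rate."""
    return measured_fps >= target_fps


target_budget = frame_time_ms(30)   # about 33.3 ms per frame at 30 fps
measured_time = frame_time_ms(50)   # 20.0 ms per frame at 50 fps
headroom_ms = target_budget - measured_time  # slack left in each frame

if __name__ == "__main__":
    print(f"Headroom per frame: {headroom_ms:.1f} ms")
    print(f"Meets 30 fps target: {meets_target(50)}")
```

At 50 fps each frame takes 20 ms, which leaves roughly 13 ms of slack per frame against a 30 fps target.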

Conclusion

Our experiments demonstrate that AI avatars can significantly elevate virtual interactions by providing high-fidelity, real-time visual representations. By leveraging GPU rendering pipelines and AI accelerators, businesses can deploy AI avatars in scalable environments, ranging from remote work and virtual events to customer service and gaming. This technology has the potential to transform industries reliant on real-time virtual communication, opening up new possibilities for collaboration, entertainment, and customer engagement. By efficiently utilizing modern hardware, companies can deliver high-resolution, smooth video conferencing or virtual events with multiple participants, paving the way for more immersive digital experiences.

References

Georgetown University. (2021). Five ways to look and sound better on Zoom. https://www.georgetown.edu/news/five-ways-to-look-and-sound-better-on-zoom/

Townsend, R. (2020). Video conferencing best practices: 10 tips for video conferencing while working remote and beyond. Sendero Consulting. https://senderoconsulting.com/video-conferencing-best-practices-10-tips-for-video-conferencing-while-working-remote-and-beyond/

Umbdenstock, J. (2021). Zoom vs. Google Meet vs. Microsoft Teams. https://www.joffrey.video/zoom-vs-google-meet-vs-microsoft-teams/